Programming The iPhone For Accessibility By The Visually Impaired

Per Busch, a blind iPhone user from Germany, has been on a crusade to raise developer awareness about VoiceOver, a new-in-3.0 accessibility enhancement.

Per’s is a noble quest, so we’ll do our part here:

VoiceOver Over View

VoiceOver, says Apple, “describes an application’s user interface and helps users navigate through the application’s views and controls, using speech and sound.”

Apple offers a concise document that describes how accessibility is delivered with iPhone 3.0. I’ll further distill what’s involved:

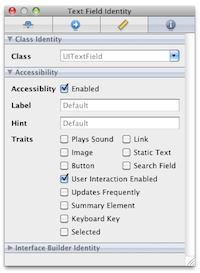

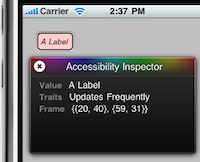

VoiceOver literally describes what’s on-screen in spoken word. As an app developer, you provide the descriptions by labeling your UI elements; providing a string hint about the element; and adding traits that describe the state, behavior or usage of the element.

You can label your UI from an inspector panel in Interface Builder. To test the app’s accessibility, you can either turn on VoiceOver or use the Accessibility Inspector.
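The same three attributes (label, hint, traits) can also be set in code. What follows is a minimal sketch, written in Swift against current UIKit rather than the Objective-C of the 3.0 SDK; the button and its strings are hypothetical and stand in for whatever your app actually shows.

```swift
import UIKit

// Hypothetical play button for a music app; the name and strings are illustrative only.
let playButton = UIButton(type: .system)
playButton.setTitle("▶", for: .normal)

// Make sure VoiceOver treats the view as a single accessible element.
playButton.isAccessibilityElement = true

// Label: a short name that identifies the element.
playButton.accessibilityLabel = "Play"

// Hint: a brief description of what happens when the element is activated.
playButton.accessibilityHint = "Plays the current track."

// Traits: how the element behaves or should be treated (here, as a button).
playButton.accessibilityTraits = .button
```

Standard controls such as UIButton already supply sensible defaults, so in practice you often only need to override the label when the visible title is a symbol or an image.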

Supporting this level of accessibility isn’t hard. Twenty minutes ago I’d never used these capabilities.

Standard UIKit controls/views support accessibility by default. The UIAccessibility informal protocol enables adding these capabilities to custom controls.
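For a custom control, the idea is to set the UIAccessibility properties on your own view. The sketch below assumes a hypothetical custom-drawn `RatingView` (not something from Apple's documentation or from this article) and uses modern Swift; the `.adjustable` trait and the increment/decrement overrides arrived in SDK releases after 3.0.

```swift
import UIKit

/// Hypothetical custom-drawn star rating control, used only for illustration.
final class RatingView: UIView {
    /// Current rating from 0 to 5; keeps the spoken value in sync.
    var rating: Int = 0 {
        didSet { accessibilityValue = "\(rating) of 5 stars" }
    }

    override init(frame: CGRect) {
        super.init(frame: frame)
        configureAccessibility()
    }

    required init?(coder: NSCoder) {
        super.init(coder: coder)
        configureAccessibility()
    }

    private func configureAccessibility() {
        // A plain UIView is not an accessibility element unless we say so.
        isAccessibilityElement = true
        accessibilityLabel = "Rating"
        accessibilityValue = "\(rating) of 5 stars"
        // .adjustable tells VoiceOver the value can be changed by swiping up or down.
        accessibilityTraits = .adjustable
    }

    // Called by VoiceOver when the user swipes up on an adjustable element.
    override func accessibilityIncrement() {
        rating = min(rating + 1, 5)
    }

    // Called by VoiceOver when the user swipes down on an adjustable element.
    override func accessibilityDecrement() {
        rating = max(rating - 1, 0)
    }
}
```

Keeping the spoken value in a `didSet` observer means any code path that changes the rating, not only VoiceOver gestures, updates what is read out.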

Limitations, And A Wish-List For Apple

Per shared an email conversation with Daniel Ashworth, CEO & Chief Architect of Quokka Studios about the limitations of the VoiceOver. With his permission, I’ll share Daniel’s comments here:

As I mentioned in a tweet, there are issues in implementing accessibility for the iPhone in third party apps. Let me explain with reference to one of our apps.

FluxTunes [link added by me] is an app that allows you to control your music using gestures anywhere on the touchscreen, e.g. tap to play/pause, a swipe to the right to advance to the next track, swipe to the left to go to the previous track, two finger swipes to change playlists, etc. Sounds like it may be ideal for a blind user who is tired of hunting through the controls in the standard player using VoiceOver and wants a customised experience that is actually a pleasure to use? The problem is that Apple has provided such limited hooks into the accessibility features of the iPhone that it’s impractical to make this application properly accessible at present.

The problems:

1. If VoiceOver or Zoom is on, then the app is unable to receive many touch events- the OS simply fails to pass on most events to the application. So an app that uses gestures is immediately unable to function at all with VoiceOver or Zoom active.

2. A fully resolved accessibility solution should allow an application to customise the behaviour of VoiceOver to suit the application. I suspect that the full accessibility solution in place on the iPhone would permit this. The problem is that Apple has opened up such a small part of accessibility for third party developers at present that it’s not possible for them to customize its behaviour as it can be for built-in apps.

3. Maybe we could get users to temporarily disable VoiceOver while using the application and have the application handle voice synthesis to provide feedback? Unfortunately not practical: it’s obviously not ideal for the user to have to switch VoiceOver on and off while switching apps, but more significantly Apple haven’t to this point opened up the text to speech APIs to third party developers. These are clearly in place in the OS, since they’re used by VoiceOver, but without these being open, text to speech would at best require embedding large additional libraries to replicate a functionality that’s already present on the phone. We’d like the ability to announce tracks even for sighted users without VoiceOver active, especially when they’re in circumstances when they shouldn’t be looking at the touch screen, e.g. when driving. In those circumstances we should essentially be treating all users as unsighted. So there’s a safety consideration for sighted users also.

So here’s my wish list as a third-party developer:

1. The means to get all touch events, even if VoiceOver/Zoom is active

2. The ability for an app to control when VoiceOver/Zoom is active when it’s running. It may be appropriate in some screens but not others.

3. Open access to the text to speech APIs

4. Better access to customise the behaviour of VoiceOver/Zoom.

I believe that with better support in place along these lines, significantly more third party applications could be made accessible or improve their accessibility. Obviously there are also many apps that could implement VoiceOver support, i.e. those based around standard controls, but aren’t necessarily doing this or doing it well.

Apple have done a good job of making their own apps accessible, but to this point they haven’t yet provided a full toolset for third party developers. I welcome any moves Apple does make to improve the toolset available. They’ve made moves with successive OS releases to open up more features, so I hope that this will be the case here also.

The Business Case For Accessibility

Reasons for making applications accessible need not be exclusively altruistic:

My mother is disabled; to the extent that we can use technology to try to get her near parity we’re willing to pay for it. This is certainly worth a premium, though it is normally the case that these bits of tech are obscenely priced; part of it has to do with the comparatively small population of buyers (as compared to e.g., the audience for DVD players) and a larger part to do with having a captive audience that can be taken advantage of.

Just like the $5 iPhone guitar tuner app obsoletes the much more expensive stand-alone guitar tuner, apps focussed on accessibility can demand a premium over the ringtone price-level.

Well, I agree with almost everything Daniel Ashworth (I believe it was) said. However, the new feature in OS 3.1 that lets you set a triple click of the home button to toggle VoiceOver on and off now makes it simple to turn VO off and use an app like “FluxTunes” the way you meant it. It’s not a “fix all” but a step in the right direction nevertheless. I am blind and would rely on VO on the iPhone, and any attempt to make an app accessible is almost always a step in the right direction. Great article guys, and hope this gives some developers some new ideas! 🙂

Many thanks for featuring this topic. Apple’s “Accessibility Programming Guide for iPhone OS” seems to be a good way to make third-party apps VoiceOver compatible.

My unanswered question is: Are there any realizability problems or other disadvantages for third-party developers which keep them away from following Apple’s iPhone accessibility instructions?

Apple could perhaps turn following these instructions into another requirement for app store approvals in the future. Dear developers, please dare, research the issue and try to implement accessibility wherever it is possible. I and many others would also like to learn about your opinions. Please code with accessibility and usability in mind, and make the iPhone miracle for blind and otherwise handicapped people the best imaginable mobile solution.

You can find out more about VoiceOver on the iPhone 3GS, media coverage and the growing blind iPhone/iPod community at:

https://blind.wikia.com/wiki/IPhone

Great article. I am forwarding the link to my friend, Gordon Fuller, who is a filmmaker and co-founded a virtual reality company. Why is this relevant? Well, Gordon is also blind and we’re looking at how social networks and augmented reality can aid in wayfinding.

I met with a couple of the accessibility presenters at WWDC’2009 and came away with the impression that they are open to improvements. I asked them point blank if they were working full time on accessibility. The answer was yes! This is quite a positive change from a few years before (pre-iphone) when the responsibility for accessibility was more diffuse.

Accessibility features are very useful for sighted individuals, as well. I am sighted but use the ctrl-alt-apple-8 key all the time on my Mac to reverse the screen. Another use is for driving a car, where looking at the screen can result in an accident. A third is for augmented reality, where anyone can leave a comment about a space. Ironically, blind users who are used to speed listening (where the speed of the audio playback is increased) may have an advantage over sighted people in socializing in the verbal web.

My top 3 suggestions are:

1) speech to text and control accessibility state – already commented on

2) selective color inverse – not simply inverting the RGB values

3) Access to TV-out

The issue with 2 is that the iPhone already uses a lot of white text on black background, which is ideal. That means a simple reverse makes the controls less accessible, not more.

The access to TV-out is based on my experience watching Gordon use a PC, over the years. He connects a PC to a large screen TV and zooms the text to where each letter is the size of my thumbnail. The problem is that it is easy to get lost. It seems that the 320×480 display on the iPhone attachable to any large screen would allow semi-blind internet surfing away from home. The fact that all apps target such a small form factor means that many people could use iPhone apps on a large screen with less manual scrolling.

So, there’s my wish list. I have already filed bugs with Apple. And please file your wish list with Apple at: https://bugreport.apple.com . Treat each wish as a separate bug report. Apple has acted on these in the past.

> My unanswered question is: Are there any realizability problems or other disadvantages for third-party developers which keep them away from following Apple’s iPhone accessibility instructions?

I have a translation app that just made it to the store and I tried to make it accessible but it seems like there are some pieces missing from the accessibility API. I have a UIPickerView with multiple segments but there doesn’t seem to be any way to add a hint for each segment. I also have a segmented control where the segments contain images, not words. There doesn’t seem to be any way to set the accessibility properties on the segments. I found a workaround but that looks pretty fragile and I didn’t get it to work with traits (to mark the button disabled).

https://junecloud.com/journal/code/voiceover-tips-for-iphone-developers.html

The other problem I have is that I don’t have a device that supports VoiceOver so the only way I have to test is using the Accessibility Inspector in the simulator. If I knew how the app behaved with VoiceOver I could make better choices about writing hints. For instance, selecting a new language with the picker changes a text label. Is that label read automatically when it changes? If so, then that makes the picker a lot easier to understand.
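If the changed label turns out not to be read automatically, an app can ask VoiceOver to speak it explicitly. This is a hedged sketch in modern Swift: the `.announcement` notification was added in an SDK release after 3.0, and `showTranslation(_:in:)` is a hypothetical helper, not part of UIKit or of the commenter's app.

```swift
import UIKit

/// Hypothetical helper: updates a label and asks VoiceOver to read the new text.
func showTranslation(_ text: String, in label: UILabel) {
    label.text = text
    // Posts an announcement that VoiceOver speaks if it is running.
    // When VoiceOver is off, the call has no audible effect.
    UIAccessibility.post(notification: .announcement, argument: text)
}
```

A call such as `showTranslation("Guten Morgen", in: resultLabel)` would then update the screen and request the spoken announcement in one step, where `resultLabel` is whatever label your picker updates.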

Also, how does VoiceOver handle foreign text? When I tried it on the Mac it did a terrible job because it treated everything as English. Is there a way to specify the language for a UITextView? This issue plus the lack of copy/paste makes me think the app is probably rather useless for VO users anyway.
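On the language question, UIKit's UIAccessibility properties include `accessibilityLanguage`, which takes a language tag for the element's content. The sketch below is in modern Swift and assumes a hypothetical German translation view; whether the property was available in the SDK the commenter was using is not something this example establishes.

```swift
import UIKit

// Hypothetical text view holding a German translation.
let translationView = UITextView()
translationView.text = "Guten Morgen"

// Tell VoiceOver which language to use when speaking this element,
// instead of falling back to the user's current language.
translationView.accessibilityLanguage = "de-DE"
```

Setting the tag per element lets a translation app mix languages on one screen, with the source text and the translated text each tagged separately.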